Is artificial intelligence (un)biased?

Whether artificial intelligence (AI) is biased depends largely on the ethics underlying it. By definition, ethics is the philosophical reflection on justifiable moral validity claims. It examines a few crucial questions, such as: How should we live? Which purposes and values guide our actions?

When working on AI and machine learning, developers have to be clear about their goal and focus. What values should the AI embody? What is its purpose? Should it strive for accuracy, efficiency, fairness, equality, privacy or transparency? The answers are not universal. For example, in medical testing the goal should be accuracy. When all that is needed is a quick overview or a preselection, efficiency might be the goal.

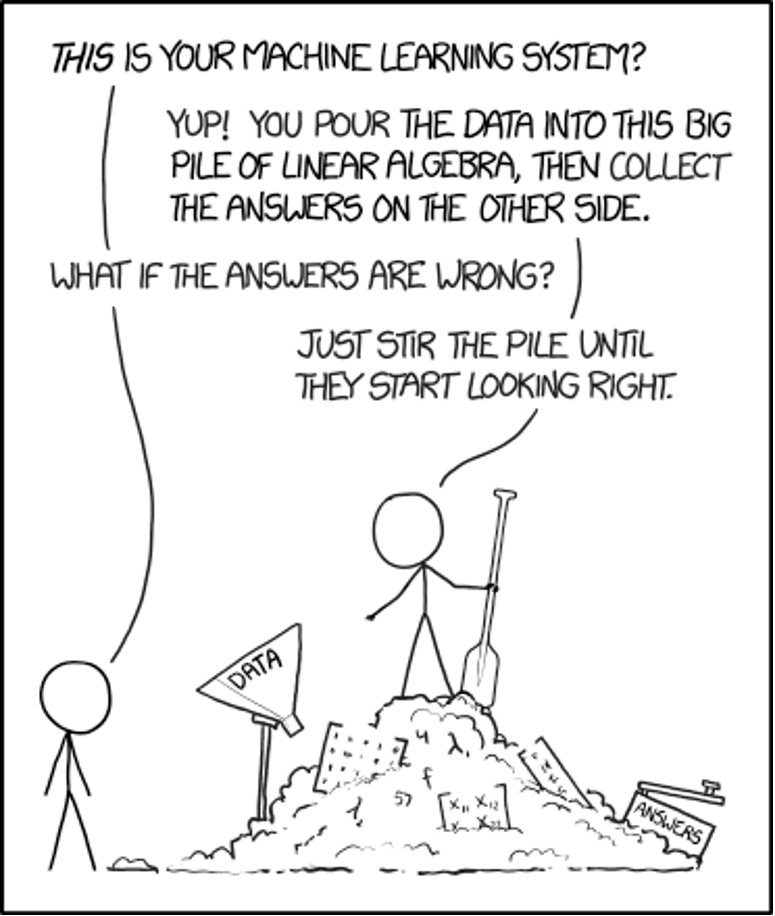

One thing that must not be forgotten is that behind every AI there is a human who did the programming. This person has a responsibility to ask themselves those ethical questions. However, for many scientists who just want to innovate, these questions are secondary. It is therefore crucial to have independent bodies that check for biases and ask those ethical questions. In general, if results obtained by AI are very surprising, it is worth checking again whether the algorithm is really doing what you set out to do.

In the summer of 2020, the British government did not give ethics appropriate consideration. Because of the pandemic, students could not sit their GCSE and A-level exams, and A-level results determine which universities students can get into. Instead of simply using the grades proposed by teachers, the exam regulator used an algorithm that calculated estimated grades. However, the algorithm relied heavily on the past results of each student's school rather than on the individual student's own performance. As a result, strong students at historically lower-performing schools, often in poorer neighborhoods, were downgraded. This could block their access to competitive top universities because of how the algorithm was designed and not because of their academic performance. The whole ordeal led to a massive media scandal and eventually to the government apologizing and dropping the algorithm in favor of teacher-assessed grades.

This is, however, not the only case in which the way an AI was trained was not fully taken into account. Programmers have to be vigilant not to build biases into their AI. For example, Amazon created an AI to sort through the CVs of job applicants and find the people who would fit the company best and have the highest chance of succeeding. They wanted the AI to be unbiased and removed the gender variable. However, the AI still chose more men than women. How come? The CVs the AI learned from were mostly written by men, since the tech industry is very male-dominated. Those CVs contained more stereotypically male interests, such as having played football or baseball, and few stereotypically female interests, such as ballet. Gender was therefore still hidden in other variables in the CV, and the AI continued to contribute to gender inequality.
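To make the idea of a "hidden" variable concrete, here is a minimal sketch of how one might check whether a remaining feature acts as a stand-in for gender. The data, the `hobby` field, the function names and the 0.8 threshold are all invented for illustration; this is not how Amazon's system worked or was audited.

```python
from collections import defaultdict

# Hypothetical toy data. A model trained on this would only see "hobby";
# "gender" is kept here solely so we can audit for proxies.
applicants = [
    {"hobby": "football", "gender": "m"},
    {"hobby": "football", "gender": "m"},
    {"hobby": "football", "gender": "f"},
    {"hobby": "baseball", "gender": "m"},
    {"hobby": "baseball", "gender": "m"},
    {"hobby": "ballet", "gender": "f"},
    {"hobby": "ballet", "gender": "f"},
    {"hobby": "chess", "gender": "m"},
    {"hobby": "chess", "gender": "f"},
]


def share_of_men_by_value(rows, feature):
    """Map each value of `feature` to the fraction of its rows where gender is 'm'."""
    counts = defaultdict(lambda: [0, 0])  # value -> [men, total]
    for row in rows:
        counts[row[feature]][1] += 1
        if row["gender"] == "m":
            counts[row[feature]][0] += 1
    return {value: men / total for value, (men, total) in counts.items()}


def proxy_values(rows, feature, threshold=0.8):
    """Return feature values that point to one gender at least `threshold` of the time."""
    shares = share_of_men_by_value(rows, feature)
    return sorted(
        value
        for value, share in shares.items()
        if share >= threshold or share <= 1 - threshold
    )


print(share_of_men_by_value(applicants, "hobby"))
print(proxy_values(applicants, "hobby"))
```

In this toy data, some hobbies reveal gender almost perfectly even though the gender column itself would never be shown to the model. That is why removing a sensitive variable alone does not make a system unbiased.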

These are not the only examples of inadvertent discrimination. Many facial recognition systems were trained mostly on images of white people and then had trouble recognizing Black or Asian people.
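One simple way to notice this kind of problem is to measure accuracy separately for each group instead of only overall. The sketch below assumes made-up evaluation records and hypothetical group labels; it illustrates the idea and is not a complete fairness audit.

```python
def accuracy_by_group(records):
    """Compute accuracy per group from (group, predicted, actual) tuples."""
    totals, correct = {}, {}
    for group, predicted, actual in records:
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (predicted == actual)
    return {group: correct[group] / totals[group] for group in totals}


# Hypothetical results: (group, predicted identity, actual identity).
records = [
    ("group_a", "anna", "anna"),
    ("group_a", "ben", "ben"),
    ("group_a", "cara", "cara"),
    ("group_a", "dan", "dan"),
    ("group_b", "eve", "eve"),
    ("group_b", "finn", "gus"),
    ("group_b", "hana", "hana"),
    ("group_b", "ivo", "jon"),
]

overall = sum(predicted == actual for _, predicted, actual in records) / len(records)
per_group = accuracy_by_group(records)

print("overall:", overall)
print("per group:", per_group)
```

The overall figure can look acceptable while one group is served far worse than another, which only becomes visible once the results are split by group.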

So how do we avoid such mistakes? One of the main takeaways when talking about ethics and AI is that diversity is key. No matter the situation or area, a diverse team uncovers many such issues early on, when they can still be corrected.

In my opinion, it is crucial that everyone who comes into contact with such systems remains inquisitive and vigilant. The sentence “we should want to have AI behave our best and not copy our worst” really resonates with me. Only if we, the people behind the AI, ask all the ethical questions can we get rid of biases.

Author: Noemi Moeschlin

Image Source Title: https://espialresearch.files.wordpress.com/2019/06/ai-ethics-banner.jpg