Artificial intelligence and self-driving cars

Driving a vehicle is not an easy task. It involves more than steering forward, left, or right and judging speed. The behavior of other drivers must be anticipated, and road conditions must be considered, all in real time. It takes a human time to learn all these skills. Yet no matter how much we practice and no matter how good we are, humans will always make mistakes. Roughly every 23 seconds a road user dies in a car accident. That is more than 3,600 deaths every day.

Driving a car gives us freedom: we can travel long distances in a short amount of time, at least compared to covering the same distance on foot or on horseback. It allows humans to live a connected life. For some people, owning a car is the epitome of progress, self-realization, and self-expression. Why else would learning to drive be one of the things we look forward to most when turning 18?

The freedom provided by an individual car is also greater than that of public transportation. With trains and buses, you don’t get to decide the schedule, and you cannot decide which route to take. This often results in lost time and inefficiency compared to using a car. It results in a loss of self-determination.

More than 3,600 road deaths per day is the price of this freedom. A high price to pay. A price that we as individuals and as a society are obviously willing to pay.

Fully autonomous self-driving cars, meaning cars that travel from A to B using artificial intelligence systems with no human intervention other than providing the destination, are a possible solution to the risk of using a car as a means of transportation.

However, many people are skeptical about this idea, perhaps because we don’t understand it. Why should we give up driving, a task and achievement we are so proud of, and hand it over to computers that we don’t trust?

So what’s the deal with these self-driving cars? How do they work? Will we really stop driving our cars ourselves in the near future?

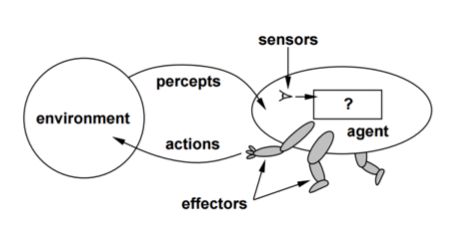

To understand self-driving cars, we must first understand artificial intelligence. One simple explanation is shown in the accompanying diagram. It describes a system that exists in an environment and is equipped with a set of sensors. The system records the sensor data, processes it (the step marked by the question mark), and finally acts on the environment through a set of effectors. In the case of a car, these effectors would be the steering wheel, the wheels, and so on.
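The sense, process, act loop described above can be sketched in a few lines of Python. This is only an illustration: the `Percept` fields, the `decide` function, and the two-second braking rule are hypothetical stand-ins for the "question mark", not how any real car works.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    """One snapshot of (hypothetical) sensor readings."""
    distance_to_obstacle_m: float
    speed_mps: float


def decide(percept: Percept) -> str:
    """The 'question mark': turn sensor data into an action for the effectors."""
    # Hypothetical rule: brake if the obstacle is less than two seconds away.
    if percept.distance_to_obstacle_m < 2.0 * percept.speed_mps:
        return "brake"
    return "keep_speed"


def run_agent(percepts):
    """Process each percept in turn and collect the chosen actions."""
    return [decide(p) for p in percepts]


actions = run_agent([Percept(100.0, 20.0), Percept(30.0, 20.0)])
print(actions)  # ['keep_speed', 'brake']
```

In a real vehicle the hand-written rule would be replaced by learned components, but the overall shape of the loop stays the same.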

The processing of the data is done by a so-called neural network. The building blocks of a neural network are neurons. A neuron takes a set of inputs, multiplies each one by a weight, sums the weighted inputs, adds a bias, and then applies an activation function to produce a signal between zero and one.
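As a minimal sketch, a single neuron can be written as follows. The sigmoid activation is an assumption made here because it produces values between zero and one; the text does not name a specific function, and the input values are made up for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Illustrative values: the weighted sum is 1.0*2.0 + 0.0*(-1.0) - 2.0 = 0.0
output = neuron([1.0, 0.0], [2.0, -1.0], -2.0)
print(output)  # 0.5
```

A neural network chains many such neurons together, with the outputs of one layer serving as the inputs of the next.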

If the output of the neuron does not match the ground truth, the neuron is corrected by adjusting its weights. This process is repeated over many examples until the neuron makes no more mistakes, or in practice until its error is acceptably small. The output can then be used, for example, to make a classification or a decision that results in an action.
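The correction loop can be illustrated with the classic perceptron learning rule. This is a deliberately simple stand-in: it uses a step activation and a toy dataset (the logical AND of two inputs), both chosen here for illustration, whereas the networks in real cars are trained with gradient-based methods on far larger data.

```python
def predict(weights, bias, inputs):
    """Step-activation neuron: outputs 1 if the weighted sum is positive, else 0."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


def train(samples, learning_rate=1.0, max_epochs=100):
    """Adjust weights whenever the output disagrees with the ground truth."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Nudge each weight in the direction that reduces the error.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # no more mistakes: stop training
            break
    return weights, bias


# Toy ground truth: output 1 only when both inputs are 1 (logical AND).
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND_GATE)
predictions = [predict(weights, bias, x) for x, _ in AND_GATE]
print(predictions)  # [0, 0, 0, 1]
```

The loop stops as soon as a full pass over the data produces no mistakes, which mirrors the description above.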

The tasks of a self-driving car can be broken down into modules, each answering one question: Where am I? (localization) Where is everyone else? (perception) How do I get from A to B? (planning) What is the driver doing? (driver monitoring)

The data for these decisions is provided by sensors. The sensors used in semi-autonomous cars are often vision-based: for example, the combination of cameras, radar, and ultrasonic sensors that Tesla uses. Fully autonomous cars like those from Waymo use a combination of lidar and maps. Both approaches have advantages and disadvantages, and each brings its own challenges.

One of these challenges is bad weather. Like human eyes, car sensors don’t work as well in fog, rain, or snow. The industry is approaching this challenge by building confidence in the system in locations with good weather before moving on to regions with bad weather.

But self-driving cars face more challenges than choosing which sensor system to rely on. A far more difficult hurdle is that driving is a social process that requires complicated interactions. In many of those situations humans rely on generalized intelligence and common sense, two things that robots still lack. Consider the following situation: a self-driving car detects an imminent collision with a car coming from the opposite direction. A fast change of direction is needed to avoid the accident. On the left is a sidewalk with people walking. Swerving left would therefore possibly hurt those people but protect the driver. On the right are trees. Swerving right would possibly kill the driver but protect the people on the sidewalk. Letting AI make a decision in such a situation is difficult, and because deep learning algorithms are hard to interpret, the outcome is also not always predictable.

Another obstacle is regulatory approval. The key point in question will be safety. Human drivers get into a fatal accident at a rate of roughly one per 100 million miles driven. Ideally, self-driving cars would be at least as safe.

By 2019, Tesla Autopilot had reached 1 billion miles with three fatal accidents. At the human rate of about one fatal accident per 100 million miles, roughly ten would be expected over that distance, which would suggest that Autopilot is about three times safer than human drivers. But to draw a statistical conclusion, many billions more miles must be driven with different self-driving systems.
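The comparison can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the text; the variable names are made up for illustration, and the result says nothing about statistical significance.

```python
# Figures quoted in the text
MILES_PER_HUMAN_FATAL_ACCIDENT = 100_000_000
autopilot_miles = 1_000_000_000
autopilot_fatal_accidents = 3

# Fatal accidents expected if humans had driven the same distance
expected_human_fatal = autopilot_miles / MILES_PER_HUMAN_FATAL_ACCIDENT

# How many times lower the Autopilot rate is than the human rate
safety_ratio = expected_human_fatal / autopilot_fatal_accidents

print(f"Expected at human rate: {expected_human_fatal:.0f}")
print(f"Observed with Autopilot: {autopilot_fatal_accidents}")
print(f"Ratio: {safety_ratio:.1f}x")
```

With only three observed events, small changes in the count shift the ratio considerably, which is why far more miles are needed before drawing firm conclusions.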

Chances are high that once the industry is ready to sell self-driving cars, regulators will have to make decisions or provide a framework for dealing with this uncertainty.

But when will the industry be ready? Car manufacturers like Nissan, Honda, Toyota, BMW, and others are currently testing fully autonomous vehicles. Some, like Tesla, already have semi-autonomous cars on the market.

Tesla CEO Elon Musk said in February 2017: “My guess is that in probably 10 years it will be very unusual for cars to be built that are not fully autonomous.”

Rodney Brooks, former director of the Computer Science and Artificial Intelligence Laboratory at the Massachusetts Institute of Technology (MIT), predicts that by 2031 a major city will ban manually driven cars from a non-trivial portion of the city, and that around 2045 a majority of US cities will do the same.

It will take a breakthrough by at least one project that makes it through the prototype and testing phase. However, only when self-driving cars reach meaningful deployment can humans gain trust in the technology and adapt to it. I think the real challenge for the automotive industry will not just be to invent the technology for self-driving cars, but to get humans to use them. Very likely the technology will be ready long before it is used.

Author: Frank Esslinger

Image Source Title: https://www.forbes.com/sites/forbestechcouncil/2021/08/31/14-tech-experts-predict-exciting-future-features-of-driverless-cars/

References:

http://aima.cs.berkeley.edu/

https://www.vox.com/2016/4/21/11447838/self-driving-cars-challenges-obstacles

https://www.rand.org/pubs/research_reports/RR1478.html

https://www.nytimes.com/interactive/2018/03/20/us/self-driving-uber-pedestrian-killed.html

https://www.theverge.com/2017/10/18/16491052/velodyne-lidar-mapping-self-driving-car-david-hall-interview

https://www.youtube.com/watch?v=URi4raUe2Cs